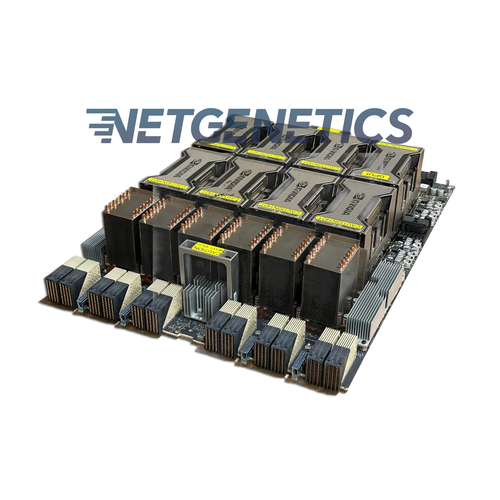

NVIDIA DGX A100 AI Deep Learning Server 8x A100 SXM4 80GB

The NVIDIA DGX A100 AI Deep Learning Server is a purpose-built platform for accelerating AI, machine learning, and high-performance computing workloads. Its eight NVIDIA A100 Tensor Core GPUs are connected by high-speed NVLink, giving the system the performance needed for training and inference at scale. Suited to data centers, research institutions, and enterprise AI deployments, it combines optimized hardware, software, and management tools to streamline development for demanding AI applications.

| Specifications - Nvidia DGX A100 |

|

|---|---|

GPUs |

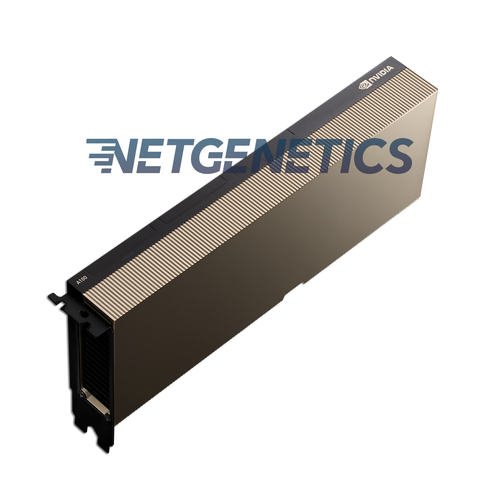

Eight Nvidia A100 Tensor Core GPU SMX4 80GB |

GPU Memory |

640GB total |

NVIDIA NVSwitches |

6 |

| CPU | 2× AMD EPYC 7742, 128 cores total, 2.25 GHz (base), 3.4 GHz (max boost) |

System Memory |

2TB 2933 ECC DDR-4 |

| System OS | No OS Installed |

| Storage OS | 2× 1.92TB M.2 NVMe drives |

| Internal Storage | 30TB (8× 3.84TB U.2 NVMe drives) |

| Networking | 6× Single Port NVIDIA Mellanox ConnectX-6 (200 Gb/s InfiniBand, 10/25/50/100/200 Gb/s Ethernet) 1× BCM – 1 LAN |

BMC (Out-of-band system management) |

1× 1 GbE RJ45 interface Supports IPMI, SNMP, KVM, Web UI, and Redfish APIs |

In-band System Management |

1× 1 GbE RJ45 interface |

| System Dimensions |

6U Rackmount Height: 10.4 in (264.0 mm) |

Operating Temperature |

5ºC to 30ºC (41ºF to 86ºF) |

System Power Usage |

6.5 kW max |

Power Supply |

Dual 220v |

| Included | Faceplate, Mounting Rails (No Hardware) |
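
For capacity planning, the aggregate figures in the table can be checked against the per-component values. The short sketch below does only that arithmetic; the constant and function names are illustrative, and every number is copied from the table (per-CPU core count is derived from the stated 128-core total across two CPUs).

```python
# Sanity check of the aggregate figures quoted in the specification table.
# All values come from the table; names here are illustrative only.

GPU_COUNT = 8
GPU_MEMORY_GB = 80            # per A100 SXM4 GPU

U2_DRIVE_COUNT = 8
U2_DRIVE_GB = 3840            # 3.84TB per U.2 NVMe drive, in decimal GB

CPU_COUNT = 2
TOTAL_CPU_CORES = 128         # as stated in the CPU row


def total_gpu_memory_gb() -> int:
    """Total GPU memory across all GPUs, in GB."""
    return GPU_COUNT * GPU_MEMORY_GB


def total_internal_storage_gb() -> int:
    """Raw internal (U.2) storage capacity, in decimal GB."""
    return U2_DRIVE_COUNT * U2_DRIVE_GB


def cores_per_cpu() -> int:
    """Cores per CPU socket, derived from the stated total."""
    return TOTAL_CPU_CORES // CPU_COUNT


if __name__ == "__main__":
    print(f"GPU memory:       {total_gpu_memory_gb()} GB")
    print(f"Internal storage: {total_internal_storage_gb() / 1000:.2f} TB")
    print(f"Cores per CPU:    {cores_per_cpu()}")
```

The raw internal storage works out to 30.72TB, which the table rounds to 30TB; usable capacity after formatting or RAID configuration will be lower.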